'Technology, hailed as the means of bringing nature under the control of our intelligence, is enabling nature to exercise intelligence over us' (Dyson 1997).

But it is more likely that the future of our intelligence will be influenced by developments underway in robotics. Progress in machine intelligence will have profound effects on our own intelligence. Why?

At present we make a distinction between two kinds of intelligence: biological or organic intelligence, and machine or inorganic intelligence. A composite (organic-inorganic, or man-machine) intelligence will evolve in the near future (cf. Part 89). It appears inevitable that, aided by human beings, an empire of inorganic life (intelligent robots) will evolve, just as biological or organic life has evolved. We are about to enter a post-biological world, in which machine intelligence, once it has crossed a certain threshold, will not only undergo Darwinian and Lamarckian evolution on its own, but will do so millions of times faster than the biological evolution we are familiar with so far. The result will be intelligent structures with a composite, i.e. organic-inorganic or man-machine, intelligence.

Moravec's (1999) book, 'Robot:

Mere Machine to Transcendent Mind', sets out a possible scenario. He expects robots to model

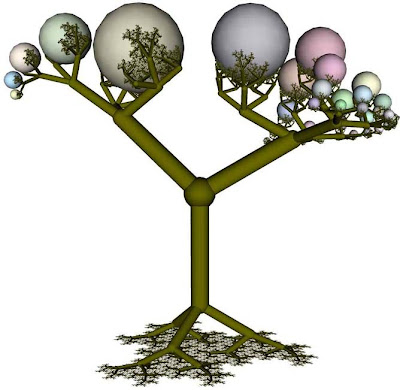

themselves on successful biological forms. One such form - used by trees, the

human circulatory system, and basket starfish - is a network of ever-finer branches: the starfish robot.

A 'bush robot' is another likely development in Moravec's scheme of things: 'Twenty-five branchings would connect a meter-long stem to a trillion fingers, each a thousand atoms long and able to move about a million times per second.'
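The arithmetic behind the quoted figures can be checked with a short sketch. It assumes, purely for illustration, that every level of the bush splits into the same number of sub-branches; the quotation itself does not state the branching factor, and the names `BRANCHINGS`, `FINGERS` and `tips` are hypothetical.

```python
# Figures taken from the quoted passage.
BRANCHINGS = 25        # "Twenty-five branchings"
FINGERS = 1e12         # "a trillion fingers"

# Assumption: the same branching factor at every level.
# Solve factor ** BRANCHINGS == FINGERS for factor.
factor = FINGERS ** (1 / BRANCHINGS)


def tips(branching_factor: int, levels: int = BRANCHINGS) -> int:
    """Number of finger tips after `levels` uniform branchings."""
    return branching_factor ** levels


print(f"uniform branching factor needed: {factor:.2f}")
print(f"two-way splits give   {tips(2):,} tips")
print(f"three-way splits give {tips(3):,} tips")
```

Under this assumption a two-way split falls far short of a trillion, while a three-way split at each level comes close to it.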

Medical applications are among the many likely uses of the bush robot listed by Moravec: 'The most complicated procedures could be completed almost instantaneously by a trillion-fingered robot, able, if necessary, to simultaneously work on almost every cell of a human body.'

When will all this happen, and what will be its possible bearing on our intelligence?

Estimates vary widely. The most optimistic ones are those of Kurzweil. This is what he wrote in 1999: 'Sometime early in the next century, the intelligence of machines will exceed that of humans. Within several decades, machines will exhibit the full range of human intellect, emotions and skills, ranging from musical and other creative aptitudes to physical movement. They will claim to have feelings and, unlike today’s virtual personalities, will be very convincing when they tell us so. By 2019 a $1,000 computer will at least match the processing power of the human brain. By 2029 the software for intelligence will have been largely mastered, and the average personal computer will be equivalent to 1,000 brains.'
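The two dated milestones in this quotation imply a rate of growth. The sketch below works it out under two assumptions that are not in the source: that the '$1,000 computer' of 2019 and the 'average personal computer' of 2029 are comparable machines, and that growth between the two dates is a smooth exponential.

```python
import math

# Figures taken from the quoted passage.
YEAR_ONE_BRAIN = 2019         # one brain-equivalent
YEAR_THOUSAND_BRAINS = 2029   # 1,000 brain-equivalents
GROWTH = 1_000                # ratio between the two milestones

# Assumption: steady exponential growth between the two dates.
years = YEAR_THOUSAND_BRAINS - YEAR_ONE_BRAIN
doublings = math.log2(GROWTH)
doubling_time_years = years / doublings

print(f"{doublings:.2f} doublings in {years} years")
print(f"implied doubling time: {doubling_time_years:.2f} years")
```

On these assumptions the forecast amounts to computing power doubling roughly once a year.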

There were reasons for the optimism exuded by Kurzweil: 'We are already putting computers - neural implants - directly into people’s brains to counteract Parkinson’s disease and tremors from multiple sclerosis. We have cochlear implants that restore hearing. A retinal implant is being developed in the U.S. that is intended to provide at least some visual perception for some blind individuals, basically by replacing certain visual-processing circuits of the brain. Recently scientists from Emory University implanted a chip in the brain of a paralyzed stroke victim that allows him to use his brainpower to move a cursor across a computer screen.'

In his book The Age of Spiritual Machines (1999), Kurzweil enunciated his Law of Accelerating Returns, which simply paraphrases the occurrence of positive feedback in the evolution of complex adaptive systems in general, and biological and artificial evolution in particular; the law embodies exponential growth of evolutionary complexity and sophistication: 'advances build on one another and progress erupts at an increasingly furious pace. . . . As order exponentially increases (which reflects the essence of evolution), the time between salient events grows shorter. Advancement speeds up. The returns - the valuable products of the process - accelerate at a nonlinear rate. The escalating growth in the price performance of computing is one important example of such accelerating returns. . . . The Law of Accelerating Returns shows that by 2019 a $1,000 personal computer will have the processing power of the human brain - 20 million billion calculations per second. . . . Neuroscientists came up with this figure by taking an estimation of the number of neurons in the brain, 100 billion, and multiplying it by 1,000 connections per neuron and 200 calculations per second per connection. By 2055, $1,000 worth of computing will equal the processing power of all human brains on Earth (of course, I may be off by a year or two).'
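The brain estimate in this quotation is a simple product of three numbers, and a few lines of code confirm that they do multiply out to the stated '20 million billion'. The constant names are illustrative; the values are those given in the quotation.

```python
# Figures taken from the quoted passage.
NEURONS = 100 * 10**9                   # "100 billion" neurons
CONNECTIONS_PER_NEURON = 1_000          # "1,000 connections per neuron"
CALCS_PER_SEC_PER_CONNECTION = 200      # "200 calculations per second per connection"

brain_calcs_per_sec = (
    NEURONS * CONNECTIONS_PER_NEURON * CALCS_PER_SEC_PER_CONNECTION
)

# "20 million billion" written out as 20 x 10^6 x 10^9.
TWENTY_MILLION_BILLION = 20 * 10**6 * 10**9

print(f"estimated brain capacity: {brain_calcs_per_sec:.1e} calculations per second")
print("matches the quoted figure:", brain_calcs_per_sec == TWENTY_MILLION_BILLION)
```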

The law is similar to Moore's law, except that it is applicable to all 'human technological advancement, the billions of years of terrestrial evolution' and even 'the entire history of the universe'.

Kurzweil's latest book How to Create a Mind (2012) has been summarized in considerable detail at http://newbooksinbrief.com/2012/11/27/25-a-summary-of-how-to-create-a-mind-the-secret-of-human-thought-revealed-by-ray-kurzweil/.

He puts forward a 'pattern recognition theory' for how the brain functions, similar to Jeff Hawkins' theory published in his famous book On Intelligence: How a New Understanding of the Brain will Lead to the Creation of Truly Intelligent Machines (2004). According to Kurzweil, our neocortex contains 300 million very general pattern-recognition circuits which are responsible for most aspects of human thought, and a computer version of this design can be used to create artificial intelligence more capable than the human brain. As computational power grows, machine intelligence will represent an ever-increasing percentage of total intelligence on the planet. Ultimately it will lead (by 2045) to the 'Singularity', a merger between biology and technology. 'There will be no distinction, post-Singularity, between human and machine intelligence . . .'.

It is only a matter of time before we merge with the intelligent machines we are creating.

Stephen Hawking expressed the fear that humanity may destroy itself if there is a nuclear holocaust, and suggested the escape of at least a few individuals into outer space as a way of preserving the human race. But, for all our bravado, our bodies are delicate stuff which can survive only in a narrow range of temperatures and other environmental conditions. Our robots will not suffer from that handicap, and will be able to withstand high radiation fields, extreme temperatures, near-vacuum conditions, etc. Such robots (or even cyborgs) will be able to communicate with one another, which makes the development of distributed intelligence all but inevitable. And when each such robot is already way ahead of us in intelligence, a distributed superintelligence will emerge, itself capable of further evolution. The ever-evolving superintelligence and knowledge will benefit each agent in the network, leading to a snowballing effect.

Further, the superintelligent agents may organize themselves into a hierarchy, rather like what occurs in the human neocortex. Such an assembly would be able to see incredibly complex patterns and analogies which escape our comprehension, leading to a dramatic increase in our knowledge and understanding of the universe. Moravec expressed the view that this superintelligence will advance to a level where it is more mind than matter, suffusing the entire universe. We humans will be left far behind, and may even disappear altogether from the cosmic scene.

An alternative though similar picture was painted by Kurzweil (2005), envisioning a coevolution of humans and machines via neural implants that will enable an uploading of the human carbon-based neural circuitry into the prevailing hardware of the intelligent machines. Humans will simply merge with the intelligent machines. The inevitable habitation of outer space and the further evolution of distributed intelligence will occur concomitantly. Widely separated intelligences will communicate with one another, leading to the emergence of an omnipresent superintelligence.

You want to call that 'God'? Don't. That omnipresent superintelligence would be our creation; a triumph of our science and technology; a result of what we humans can achieve by adopting the scientific method of interpreting data and information.

'We are the brothers and sisters of our machines' (Dyson 1997).