In Part 73 I began introducing you to the field of computational intelligence (CI). It has a number of subfields, and I described one of them (fuzzy logic). Another branch of CI deals with artificial neural networks (ANNs). ANNs attempt to mimic the human brain for carrying out computations.

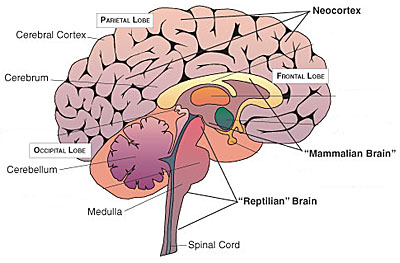

The top outer portion of the human brain, just under the scalp, is the neocortex. Almost everything we associate with intelligence occurs in the neocortex, with important roles also played by the thalamus and the hippocampus. The human brain has ~10¹¹ nerve cells or neurons, many of them packed into the neocortex.

Most of the neurons have a pyramid-shaped central body (the soma, which contains the nucleus). In addition, a neuron has an axon and a number of branching structures called dendrites. The axon is a signal emitter, and the dendrites are signal receivers. A connection called a synapse is established when a strand of an axon of one neuron ‘touches’ a dendrite of another neuron. The axon of a typical neuron (and there are 10¹¹ of them) makes several thousand synapses. The brain is a massively parallel computation system.

When a sensory or other pulse (‘spike’) involving a particular synapse arrives at the axon, it causes the synaptic vesicles in the first (the ‘presynaptic’) neuron to release chemicals called neurotransmitters into the gap or synaptic cleft between the axon of the first neuron and the dendrite of the second (the ‘postsynaptic’) neuron. These chemicals bind to the receptors on the dendrite, triggering a brief local depolarization of the membrane of the postsynaptic cell. This is described as a firing of the synapse by the presynaptic neuron.

If a synapse is made to fire repeatedly at high frequency, it becomes more sensitive: subsequent signals make it undergo greater voltage swings or spikes. Building up of memories amounts to formation and strengthening of synapses.

The firing of neurons follows two general rules:

1. Neurons which fire together wire together. Connections between neurons firing together in response to the same signal get strengthened.

2. Winner-takes-all inhibition. When several neighbouring neurons respond to the same input signal, the strongest or the ‘winner’ neuron will inhibit the neighbours from responding to the same signal in future. This makes these neighbouring neurons free to respond to other types of input signals.
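
The two rules can be illustrated with a toy sketch. This is not a biological model: the function names, the starting weights, the learning rate and the product form of the update are all assumptions chosen for illustration. The winner-takes-all step silences every unit except the strongest responder, and the Hebbian step then strengthens only the connections between the active inputs and that winner.

```python
def hebbian_update(weights, pre, post, rate=0.1):
    """Strengthen weights[i][j] in proportion to the joint activity of
    presynaptic input j and postsynaptic unit i (assumed product rule)."""
    return [
        [w + rate * post[i] * pre[j] for j, w in enumerate(row)]
        for i, row in enumerate(weights)
    ]


def winner_takes_all(activations):
    """Keep only the strongest response; every other unit is silenced."""
    winner = max(range(len(activations)), key=lambda i: activations[i])
    return [1.0 if i == winner else 0.0 for i in range(len(activations))]


# Two neighbouring neurons listening to three input lines.
# The starting weights are hypothetical values for illustration.
weights = [[0.2, 0.1, 0.0],
           [0.1, 0.1, 0.1]]
signal = [1.0, 1.0, 0.0]

# Each neuron's response is the weighted sum of the incoming signal.
activations = [sum(w * x for w, x in zip(row, signal)) for row in weights]

# Only the winner stays active, so only its synapses are strengthened.
post = winner_takes_all(activations)
weights = hebbian_update(weights, signal, post)
```

Because only the winner's weights for this signal grow, it responds even more strongly to the same signal next time, while the losing neuron's weights stay unchanged and remain available for other inputs.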

McCulloch and Pitts (1943) were the first to suggest that the brain can be modelled as a network of logical operations such as AND, OR and NOT. Their paper presented the first model of a neural network. The McCulloch-Pitts model was the first to try to understand the workings of the brain as a case of information processing. This model was also the first to demonstrate that a network of simple logic gates can perform even complex computations (cf. Nolfi and Floreano 2000).
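
A minimal sketch of this idea follows. It is a simplification rather than the formulation in the 1943 paper: inhibition is represented here by a negative weight, and the function names, weights and thresholds are illustrative choices. Each unit fires when the weighted sum of its binary inputs reaches a threshold, and a small network of such units computes XOR, which no single unit of this kind can do.

```python
def mp_unit(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of binary inputs
    reaches the threshold; otherwise stay silent (return 0)."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def and_gate(a, b):
    # Both inputs must be active to reach the threshold of 2.
    return mp_unit([a, b], [1, 1], 2)


def or_gate(a, b):
    # A single active input is enough to reach the threshold of 1.
    return mp_unit([a, b], [1, 1], 1)


def not_gate(a):
    # A negative (inhibitory) weight silences the unit when a is 1.
    return mp_unit([a], [-1], 0)


def xor_gate(a, b):
    # A small network of gates: (a OR b) AND NOT (a AND b).
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))
```

For example, `xor_gate(1, 0)` evaluates to 1 and `xor_gate(1, 1)` evaluates to 0.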

In 1949 Hebb published his celebrated book, The Organization of Behavior. The first assumption he made was that the synaptic connections among the neurons in the brain are constantly changing, and these synaptic changes form the basis of all learning and memory. As a result of these self-regulated changes, even a randomly connected neural network would soon organize itself into a pattern, and the pattern is ever-changing in response to feedback and sensory inputs. A kind of positive feedback is operative: frequently used synapses grow stronger, and the seldom used synapses become weaker. The frequently used synapses eventually become so strong as to be locked in as memories. The memory patterns are not localized; they are widely distributed over the brain. Hebb was among the earliest to use the term ‘connectionist’ for such distributed memories.

Hebb made a second assumption: the selective strengthening of the synapses among the neurons by the nerve impulses causes the brain to organize itself into cell assemblies. These are subsets of several thousand neurons in which the circulating nerve impulses reinforce themselves, and continue to circulate. They were assumed to be the basic building blocks of information. One such block may correspond to, say, a flash of light, another to a particular sound, a third to a portion of a concept, etc. These blocks are not physically isolated. A neuron may belong to several such blocks simultaneously. Because of this overlap among blocks, activation of one cell assembly or block may trigger a response in many other blocks as well. Consequently, these blocks rapidly organize themselves into more complex concepts, something not possible for a block in isolation.

Hebb’s work provided a model for the essence of thought processes.

Rochester et al. (1956) verified Hebb’s ideas by modelling on a computer what were among the first ANNs ever. Their work was also one of the earliest in which a computer was used not just for number crunching, but for simulation studies. Cell assemblies and other forms of emergent behaviour were observed on the computer screen, beginning from a random or uniform configuration.

The term ‘processing element (PE)’ (or ‘perceptron’) is used for the basic input-output unit of an ANN which can transform a synaptic input signal into an output signal.

An ANN can be made to evolve and learn. The training of an ANN amounts to strengthening or weakening the connections (synapses) among the PEs, depending on the extent to which the output is the desired one. There are various approaches for doing this. In supervised learning, for example, a series of typical inputs is given, along with the desired outputs. The ANN calculates the root-mean-square error between the desired output and the actual output. The connection weights (w_ij) among the PEs are then adjusted to minimize this error, and the output is recalculated using the same input data. The whole process is repeated cyclically until the connection weights have settled to an optimum set of values.
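
The training cycle described above can be sketched for the simplest possible case, a single linear PE. Everything specific here is an assumption made for illustration: the update is the delta (least-mean-squares) rule, one common choice among many, and the training set, learning rate, epoch count and function names are hypothetical. Reducing the squared error in this way also reduces the root-mean-square error.

```python
import math


def predict(weights, bias, x):
    """Output of a single linear PE: weighted sum of inputs plus a bias."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def rms_error(weights, bias, samples):
    """Root-mean-square error between desired and actual outputs."""
    squared = [(target - predict(weights, bias, x)) ** 2 for x, target in samples]
    return math.sqrt(sum(squared) / len(squared))


def train(samples, rate=0.1, epochs=500):
    """Repeatedly present the same inputs and nudge each weight in the
    direction that reduces the error (delta rule, assumed here)."""
    n_inputs = len(samples[0][0])
    weights, bias = [0.0] * n_inputs, 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            weights = [w + rate * error * xi for w, xi in zip(weights, x)]
            bias += rate * error
    return weights, bias


# Hypothetical training set generated from: target = 2*x1 - x2 + 1
samples = [([0.0, 0.0], 1.0), ([1.0, 0.0], 3.0),
           ([0.0, 1.0], 0.0), ([1.0, 1.0], 2.0)]

before = rms_error([0.0, 0.0], 0.0, samples)  # error with untrained weights
weights, bias = train(samples)
after = rms_error(weights, bias, samples)     # error after training
```

After training, `after` is far smaller than `before`, and the weights settle close to the values 2 and -1 (with a bias close to 1) that were used to generate the data.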

The figure below illustrates the idea that a neuron fires if the weighted sum of its inputs exceeds a threshold value.

An important feature of neural networks has been pointed out by Kurzweil (1998). It is the selective destruction of information. This is something so important that it can be regarded as the essence of all computation and intelligence. An enormous amount of information flow occurs in a neural network. Each neuron receives thousands of continuous signals. But finally it either fires or does not fire, reducing all the input to a single bit of information, namely the binary 0 or 1.
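
This reduction of many continuous signals to one bit can be shown in a few lines. The sketch below is only an illustration: the input count, the random input values and weights, and the threshold of zero are all hypothetical, and the function name `fires` is my own.

```python
import random


def fires(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold,
    else 0. All detail in the inputs is discarded in the process."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum > threshold else 0


rng = random.Random(0)   # fixed seed so the sketch is repeatable
n_inputs = 5000          # hypothetical stand-in for "thousands" of synapses

# Continuous input signals and connection weights (illustrative values only).
inputs = [rng.random() for _ in range(n_inputs)]
weights = [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]

# Five thousand real numbers go in; a single bit comes out.
bit = fires(inputs, weights, threshold=0.0)
```

Many different input patterns map to the same output bit, which is the sense in which information is selectively destroyed.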
